It may sound like something out of a science fiction novel, but thanks to researchers at the National University of Singapore (NUS), robots and human prosthetics may soon have a sense of touch similar or superior to that of human skin [1].

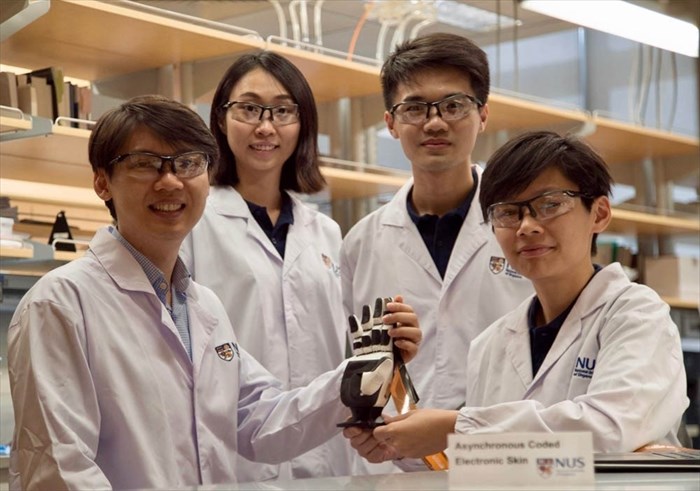

The newly developed technology, which draws inspiration from the human sensory nervous system, is called the Asynchronous Coded Electronic Skin (ACES). The system is robust, exceptionally responsive and compatible with any type of sensor skin layer, giving the robot or prosthetic in question a 'sense of touch' of its very own [2].

How does the sense of touch work in humans?

Touch relies on our largest sensory organ, the skin, which spans the entire surface of our bodies. Every day we use this sense to accomplish a myriad of tasks, keep our balance, move around in various spaces and interact socially.

A variety of sensory receptors help us to sense and respond to numerous stimuli. The main types are as follows [3]:

- Mechanoreceptors – these allow us to sense and respond to things like pressure, tension and vibration.

- Thermoreceptors – these help us to sense and respond to temperature ('thermo' means heat).

- Nociceptors – these ensure that we sense and respond to pain.

This network of sensory receptors exists not only in the skin, but is distributed throughout the body in the muscles, organs, blood vessels and bones. This complex system is collectively referred to as the somatosensory system.

For us to sense and respond to things when we touch them, the receptors located within the upper layers of our skin (the epidermis and dermis) must gather information from the environment and turn it into signals that the nervous system can understand. The nervous system is the complex network of nerve cells and nerves that carries signals between the brain, the spinal cord and the rest of the body. These signals are relayed along nerve pathways until they reach the brain. The brain is the 'master organ' that then interprets the signals and relays information back along the nerve pathways. This action enables us to respond to the stimulus.

So, for example, if we accidentally touch a hot stove plate, our somatosensory system is engaged and relays the signal that the plate is hot. The brain interprets this signal and relays signals that tell the hand to pull away from the potential danger in order to avoid a serious burn.

ACES versus the existing touch technologies

According to Assistant Professor Benjamin Tee, who led the research team that developed ACES, robots do not currently feel things very well. Equipping intelligent androids and prosthetics with electronic skin (e-skin) that contains a number of touch sensors should allow them to work and collaborate with humans more naturally and to manipulate objects in unstructured environments.

While touch-sensitive technologies do already exist, the current versions predominantly transmit tactile information from sensors in sequence, one sensor after another. This can result in data-transfer bottlenecks that cause reaction delays. Another drawback is that these technologies require increasingly complex wiring as more sensors are added.
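To see why sequential readout becomes a bottleneck, consider a rough sketch in Python. The per-sensor read time of 1 microsecond is an illustrative assumption, not a figure from the NUS work, and the function name is hypothetical. The point is only that the worst-case delay grows in proportion to the number of sensors when they are read one after another.

```python
def worst_case_polling_delay(num_sensors: int, read_time_s: float) -> float:
    """Worst-case wait before a touched sensor is read in a sequential scan.

    A sensor touched just after its own turn has to wait until every
    sensor has been read once before the controller returns to it.
    """
    if num_sensors < 0 or read_time_s < 0:
        raise ValueError("num_sensors and read_time_s must be non-negative")
    return num_sensors * read_time_s


# Hypothetical read time per sensor; chosen only for illustration.
ASSUMED_READ_TIME_S = 1e-6

for n in (240, 10_000):
    delay_s = worst_case_polling_delay(n, ASSUMED_READ_TIME_S)
    print(f"{n} sensors: worst-case delay of about {delay_s * 1e3:.2f} ms")
```

Under this assumption, going from a few hundred sensors to ten thousand multiplies the worst-case delay by the same factor, which is the scaling problem the article describes.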

The newly developed technology employed in ACES detects and allows for the simultaneous transmission of thermal (temperature) and tactile (touch) data with fewer delays than conventional tactile technologies. This holds true even when using over 10,000 sensors, giving ACES an unrivalled capacity for scalability.

The team’s working prototype currently employs 240 receptors and a single electrical conductor to transmit information. It does this asynchronously, significantly reducing information delays while maintaining a responsiveness of under 60 nanoseconds, a speed that not only efficiently facilitates rapid tactile perception but also makes it the fastest electronic skin technology ever produced.
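The asynchronous idea described above can be pictured as event-driven reporting: each sensor signals the moment it is touched instead of waiting for its turn. The following Python sketch is a simplified model under stated assumptions and not the ACES signalling scheme itself. `TouchEvent`, `receive_async` and the fixed `link_delay_s` are hypothetical names, and the 60-nanosecond value is borrowed from the article's figure purely as a placeholder.

```python
import heapq
from dataclasses import dataclass, field
from typing import Iterable, List, Tuple


@dataclass(order=True)
class TouchEvent:
    time_s: float                         # when the sensor was touched
    sensor_id: int = field(compare=False)  # which sensor fired


def receive_async(events: Iterable[TouchEvent],
                  link_delay_s: float) -> List[Tuple[int, float]]:
    """Return (sensor_id, arrival_time) pairs in order of arrival.

    Every sensor reports on its own as soon as it is touched, so the
    arrival time depends only on the touch time plus a fixed link delay,
    not on how many other sensors share the conductor.
    """
    queue = list(events)
    heapq.heapify(queue)  # order events by touch time
    received = []
    while queue:
        event = heapq.heappop(queue)
        received.append((event.sensor_id, event.time_s + link_delay_s))
    return received


# Two touches on different sensors, listed out of order on purpose.
touches = [TouchEvent(2e-3, sensor_id=7), TouchEvent(1e-3, sensor_id=3)]
for sensor_id, arrival_s in receive_async(touches, link_delay_s=60e-9):
    print(f"sensor {sensor_id} reported at {arrival_s * 1e3:.6f} ms")
```

In this model, adding more sensors changes nothing about when a given touch is reported, which is the property that lets an asynchronous design scale.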

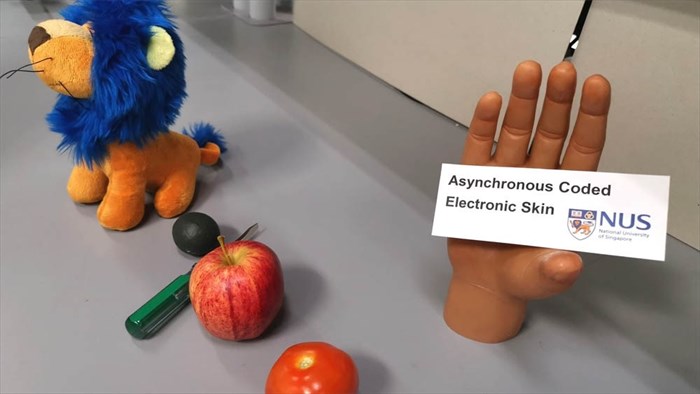

To put this into perspective for real-world applications, ACES can sense touch more than 1,000 times faster than the human somatosensory system. What’s more, when prosthetics or robots are fitted with ACES-enabled skin, they can accurately identify the texture, hardness and shape of objects within 10 milliseconds. That’s 10 times quicker than the blink of an eye.

ACES has also been engineered to resist physical damage, something that is extremely important when it comes to electronic skins as, like our own skin, they frequently come into contact with the physical environment, making them vulnerable to wear and tear.

While existing technologies employ interconnected sensors, every ACES sensor connects to a single shared conductor and operates independently of the others. This means that even if some sensors break, the system continues to work as long as at least one sensor remains functional, making it less vulnerable to damage.
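The difference in damage tolerance can be illustrated with a toy comparison in Python. Modelling "interconnected" sensors as a simple series chain, where a break cuts off everything behind it, is an assumption made here for illustration; real interconnected designs vary. The function names are hypothetical.

```python
from typing import Iterable


def working_in_chain(broken: Iterable[int], num_sensors: int) -> int:
    """Sensors still readable when wired in series (assumed topology).

    Sensors are numbered from 0 at the controller end. The first broken
    sensor cuts off itself and every sensor after it.
    """
    broken_ids = {i for i in broken if 0 <= i < num_sensors}
    return min(broken_ids, default=num_sensors)


def working_on_shared_conductor(broken: Iterable[int], num_sensors: int) -> int:
    """Sensors still readable when each attaches independently to one conductor.

    A broken sensor only removes itself; all others keep reporting.
    """
    broken_ids = {i for i in broken if 0 <= i < num_sensors}
    return num_sensors - len(broken_ids)


damaged = [5, 100, 200]  # hypothetical damage to a 240-sensor skin
print("series chain:    ", working_in_chain(damaged, 240))
print("shared conductor:", working_on_shared_conductor(damaged, 240))
```

With three damaged sensors out of 240, the chain model keeps only the five sensors ahead of the first break, while the shared-conductor model loses just the three damaged ones.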

Using e-skins for robots and human prosthetics

It is hoped that the ACES design, which marries simple wiring with astounding responsiveness even as the number of sensors increases, will enable the rapid scaling of intelligent e-skins for use in artificial intelligence (AI) applications.

Its creators have designed it to pair with any type of sensory skin layer, the most notable of which is their own newly developed water-resistant, transparent, self-healing e-skin. Not only does this e-skin heal on its own, much like our own skin, but it also gives a more natural appearance to prosthetic limbs while restoring disabled individuals' sense of touch, something most could only dream of.

The team believes that their technology also has a variety of other applications, including use in intelligent robots designed to undertake disaster recovery or to perform routine tasks such as packing on production lines.

In the next round of research, the team will look at applying these technologies to more advanced androids and human prosthetics.

References

1. New e-skin innovation by NUS researchers gives robots and prosthetics an exceptional sense of touch. EurekAlert!. https://www.eurekalert.org/pub_releases/2019-07/nuos-nei071719.php. Published 2019. Accessed July 19, 2019.

2. Lee W, Tan Y, Yao H et al. A Neuro-Inspired Artificial Peripheral Nervous System For Scalable Electronic Skins. 1st ed. American Association for the Advancement of Science; 2019. https://robotics.sciencemag.org/content/robotics/4/32/eaax2198.full.pdf. Accessed July 19, 2019.

3. McGurrin P. Feeling Touch | Ask A Biologist. Askabiologist.asu.edu. https://askabiologist.asu.edu/explore/how-do-we-feel-touch. Accessed July 19, 2019.